Motivation

With the growing reach of the World Wide Web, the online presence and footprint of the average person have increased significantly. This footprint ranges from content on social media to libraries on music apps. An important question, however, is what happens to this digital information after its owner passes away. Owners do not have the same control over their digital property as they do over their tangible property. Nor are there clear laws governing the posthumous transfer of the former, as there are for the latter. Therefore, the aim of this project was to find a solution to this problem and evaluate its efficacy.

Team and Duration

I undertook this four-month project as part of a four-member group. We also received bi-weekly feedback from our lecturers (two Fraunhofer employees), who took on the role of project stakeholders. Although I was part of the entire process, including the design of the solution, my main contributions were towards user research, i.e., choosing research methodologies and designing, conducting and analysing research.

Key Objectives

Our objectives were to design a user-centric solution that:

Lets owners specify exactly what happens to their online assets after they pass away

Deals sensibly with a sensitive and depressing topic such as this one

Takes care of the user's privacy concerns, confidentiality, trust and full non-disclosure

Tackles legal issues related to this area

Disclaimer: I am unable to share our findings in this case study as I have signed a Non-Disclosure Agreement with Fraunhofer Institute of Technology. However, I am happy to share the process we followed and some example deliverables. Any interest in furthering / owning this project may be taken up with FIT.

Tools

Brainstorming and mind mapping

Online survey

Scenarios and personas

Competitive analysis

Volere Template for system requirements

Task model --> Documentation and verification of user needs and requirements

Storyboards

Paper prototype

Design studio

Medium fidelity prototype using Balsamiq

Usability testing

AttrakDiff questionnaire

Process

Initialisation Phase

The aim of this phase was the initial generation of ideas and identification of potential user groups.

Brainstorming

We started by brainstorming together as a group. We collected ideas related to every aspect of a potential digital testament, such as problem areas, possible users, potential issues we could run into and so on. This phase covered the breadth of the issue at hand rather than its depth. We documented our ideas on sticky notes and structured them visually into groups and categories. This gave us a first idea of what sort of information we would want to find out from potential users.

User Research

Our next step was to identify our user groups. We started with some internet research and by talking to people informally about digital assets. Based on this, we made initial assumptions about what our user groups would look like. We then decided to carry out an online survey in the form of a questionnaire to identify our user groups and their needs. The survey helped us achieve these goals and also contradicted the assumptions we had made earlier, which was an eye-opener! With N = 114, we knew it made sense to focus on what the participants were saying and to trust the findings from our analysis of the data.

Through the survey, we identified:

Potential need for this solution

User groups

We documented these findings in the form of:

Scenarios

Personas

Based on the potential need, we came up with potential usage scenarios to define the context of use of such a solution. Based on the user groups, we created user personas to define archetypical users and their goals. This included:

4 inclusionary personas

2 exclusionary personas

An example persona created using Xtensio. Certain parts of the image have been blurred out intentionally.

By the end of this phase, we had also decided on one of the user groups as our main focus. Therefore, our research now had a direction.

Requirements Phase

In this stage, our aim was to clearly define user needs and requirements. We started by summarising our findings up to this point in the form of a concrete design problem before moving on to other steps.

Competitive Analysis

We performed an exhaustive competitive analysis of 44 websites and apps that in some way supported the posthumous management of digital assets. We grouped them into three major categories and analysed them based on their regional reach, contextual scope, UI design, method of handling legal nuances, subscription costs, etc. This helped us:

Size up the competition

Observe what they were doing right

Observe where spaces of opportunity existed

A sample summary of one website based on our analysis

Context of Use

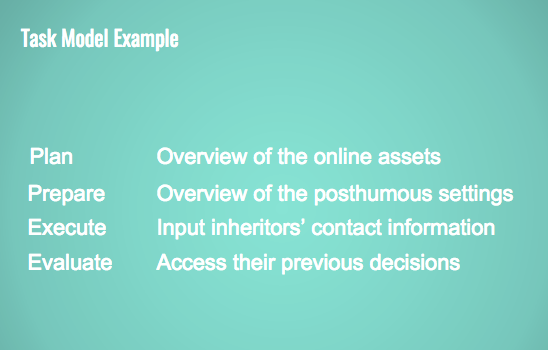

To round out the context of use, we also visualised our task models, which described the specific use cases for the multiple roles our users would be allowed to take on. We chose a web-based application to realise our solution, where the internet would be our environment and computers / mobile devices would be our tools. Therefore, our context of use consisted of:

User profiles (personas)

User goals

Use cases

Tools and environment

User Needs, User Requirements and System Requirements

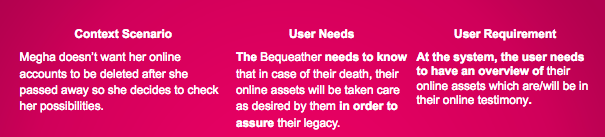

To further fine-tune our context scenarios, we interviewed five people from the target user groups. One of us would conduct the interview while another would take notes and record the audio (when allowed, of course). Based on each context scenario, we were able to state concrete user needs, which were then transformed into concrete user requirements. A user need was a goal of the user, whereas a user requirement was a more solution-oriented statement that described how this need may be fulfilled. Finally, based on the user requirements, we were able to identify system requirements, which we documented using the Volere Template. The requirements were refined throughout the entire process. Below are some examples of this.

Example of the translation of a context scenario into a user need, which was then translated into a user requirement

Example of a system requirement based on a user requirement and documented using Volere Template

We also refined our task model to reflect the user needs and requirements. Therefore, we now had a user-centric baseline for our prototypes!

Realisation Phase

The aim of this phase was to map the needs and requirements of our users into a solution by visualising these requirements.

Storyboard

We started this phase by making a simple paper-based storyboard of our proposed interface, based on the results of the previous phase. This helped us:

Visualise the interface

Map all the user needs and requirements into a structured flow

Make sure all the use cases described in the task model were a part of the interface

Create a baseline to start prototyping

A sample of the storyboard. Certain parts of the image have been blurred out intentionally.

Paper Prototype - Phase I

We started the design process by creating a paper flipbook because:

Paper prototypes are quick to create

They require less effort

Easier to fix / improve based on user feedback

Honest feedback --> Participants don't feel as guilty criticising paper prototypes during testing, especially once you quickly "fix" something so they can see how easy it is to make changes to it

Can be easily discarded and started again from scratch

We tested this prototype with seven users from the target groups. The participants were simply informed that this was a website and were given full freedom to explore it. We then followed this up with a quick interview. We asked them how they would describe the website, what they liked, what confused them, what they would change, and which features they would add or remove. We received very helpful feedback from this session. The participants would even quickly sketch their ideas out to express themselves better. Armed with this information, we moved on to refining the prototype.

Design Studio

Certain parts of the image have been blurred out intentionally.

Before improving the prototype, we decided to conduct a design studio session to get our creative juices flowing. We listed all the feedback before sketching and decided to focus our energy on fixing the problem areas. We then set aside 10 minutes to independently sketch ideas that could solve these problems. The key points were:

No copying!

Make very quick sketches --> At least 10

Don't be too detail-oriented

Not necessary to include all the features in all the sketches

But the structure of the interface should be recognisable

After the 10 minutes, we presented our ideas to the group, then critiqued and refined them for 15 to 20 minutes. Then we repeated all the steps. After the third round, we gathered together for consensus building. This step was critical in getting the entire group's ideas out onto paper and working together towards the solution.

Paper Prototype - Phase II

Based on the ideas in the design studio, we were able to improve our paper prototype. We discarded the previous prototype and started fresh. This time, we tried to incorporate the new use cases we discovered through the previous testing session, which looked at solving several privacy and legal issues. We also tried to make this prototype more interactive. When the participant "clicked" on something, we would stick on any message they were supposed to see or take them to the next page. This prototype was already more dynamic compared to the previous one.

We tested this prototype with only two participants, but we received a tonne of useful feedback, sometimes about basic things that we had somehow missed! We followed the same testing method as in the previous prototype testing session. Based on this second phase of prototype testing, we made 28 refinements to our design. We also added 8 new system requirements.

Certain parts of the image have been blurred out intentionally.

Certain parts of the image have been blurred out intentionally.

Medium-Fidelity Prototype

At this point, we had received enough validation to move forward with our design. But instead of jumping directly to a fully functional prototype, we chose to design a medium-fidelity prototype which would mimic functionality. We had the following options for tools:

- Static web page

  - Reusable

  - Higher effort

- Prototyping tools

  - Lower effort

  - Limited capabilities

  - Not reusable

We chose Balsamiq for prototyping because, although the designs would not be reusable, they would definitely be easier to modify. Balsamiq allowed us to create the prototype in a way that appeared to be functional. This was very helpful when we tested the product. When clicking on something produced no response, we informed the participants that the functionality had not been added yet and asked them what they had expected to happen on clicking the element.

Certain parts of the image have been blurred out intentionally.

Usability Testing - Initial

We initially tested the product with 4 participants from a target user group as a test run. Before starting the test, they were informed that this was a prototype of a future website that mimicked functionality but could not actually take any input from them. They were then asked to perform tasks from a pre-determined list. They were given no guidance when they got lost, so that we could see which areas of the prototype needed to be improved. We followed this up with an interview similar in nature to the one we used during the low-fidelity prototype testing. Based on this, we made some fixes to the prototype, such as changing the wording where necessary.

Usability Testing - Main

This was the final step of our process. We now conducted the same test with 13 participants belonging to our target user groups. They were also asked to perform tasks from a pre-determined list. At the end, however, instead of holding an interview with them, we asked them to fill out the AttrakDiff questionnaire. This is a questionnaire developed to "evaluate the usability and design of an interactive product". On average, we received quite good feedback from the participants who answered this questionnaire. There were still some things to be improved, but due to time constraints we ended the project at this point. Even so, by then we had created a product that users found interesting, innovative, useful and, most importantly, easy to use.

What did we do right?

We asked the wrong questions, which led us to the correct conclusions!

We incorporated lots of user, peer and stakeholder feedback which helped us create a product that users found useful and usable

We allowed users free exploration of the product as much as possible to tease out all possible flaws

We used open-ended questions in our interviews which was great for getting the conversation started and opening gates to user feedback

We iterated continuously - our requirements and our designs!

We tried to keep our design simple --> Complicated machines can be depressing

We made sure our documentation was thorough --> Our future selves were always grateful to our past selves ;)

What could we have done better?

We got too detailed in the very initial stages with personas and user roles --> This evolved over time but was very overwhelming in the beginning

We really should have refined individual personas even further; they were sometimes too repetitive

As a group of four computer scientists, we did sometimes lean towards getting too technical while defining user requirements instead of focusing on human requirements --> We did try to remedy this to a large extent though

We should definitely have used more concrete metrics while ranking the UIs of our competitors during competitive analysis

Our concept lacked a certain amount of clarity, as some users pointed out, mostly because of the overly fancy / technical terms used in the product